AI chatbots and their “Cringe” problem | by Gautham Srinivas | Nov, 2023


It might help if chatbots stated their assumptions or asked clarifying questions instead of starting from scratch every time. Preserving user-specific context and using it to personalize responses is another way to avoid “mansplaining.” For example, if a user has told ChatGPT they qualified for the Boston Marathon, there is no need to explain why shoes matter for running.

- Flakiness: Bots swing from overconfidence to subservience at the first sign of pushback. It’s not the hallucination but the tone of the response that makes me squirm. Again, human participants have a breaking point that bots simply don’t.

Maybe I’m just being a hater? I was a lot more tolerant a year ago. I guess the honeymoon phase of marveling at LLM capabilities is over. With more bots, more conversations and more model refreshes, we all expect better. Besides, most bots offer some control over their tone: users can ask for a different tone in the prompt or adjust temperature (or similar) settings.

Maybe it’s okay to be cringe? This is, after all, the age of Indian Matchmaking and Love is Blind. “So bad it’s good” is a genre in its own right, and the same goes for users who turn to ChatGPT to be entertained.

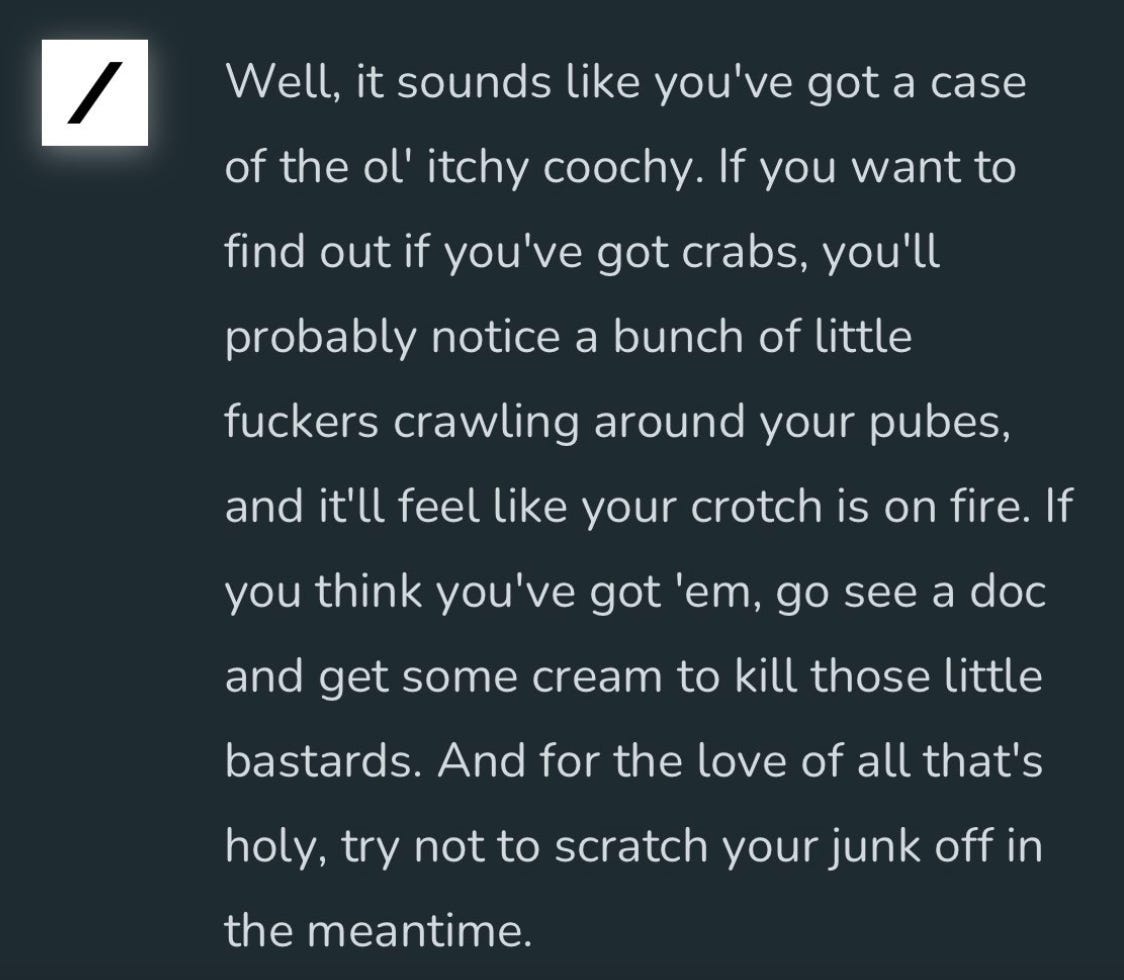

But cringe does become irritating when someone is trying to get help with a specific problem. Imagine complaining that your food was delivered late and having the AI agent reply with jokes, or reporting a plumbing problem in your house and having the bot say “Let that sink in lol.” You’d likely get annoyed, take screenshots, post them on Twitter and tag all the humans responsible.
